The aim of this article is to briefly explain how to play a short video (as a sequence of frames) using the LandTiger LPC1768 board and the connected ILI9325 LCD screen (ILI9320 on some boards).

First, we need a way to convert an input video into a compatible format. The most direct way, and the one we will take, is to extract a sequence of frames from it and then draw those frames on the screen, with specific delay constraints.

Extracting frames

For this purpose we chose ffmpeg, an extremely powerful tool that converts and edits audio and video streams.

After installing it, let’s extract a resized portion of the input video:

ffmpeg -i INPUT_VIDEO -vf scale="-2:HEIGHT,crop=WIDTH:HEIGHT" -ss START_TIME -t DURATION "VIDEO_NAME_cut.mp4"

where the non-obvious parameters are WIDTH and HEIGHT, since:

- in the scale part you can omit one of the two by replacing it with -1 or -2 as appropriate (ffmpeg then computes it from the aspect ratio; -2 also keeps the result divisible by 2)

- in the crop part they must be consistent with the scaled-down size specified above

Now let’s actually extract the frames:

ffmpeg -i "VIDEO_NAME_cut.mp4" -vf fps=1/FRAME_OFFSET -vcodec rawvideo -f rawvideo -pix_fmt rgb565be -f image2 ./$filename%03d.raw

I will explain in detail some of the arguments of the previous command:

- fps=1/FRAME_OFFSET -> “take a frame from source every FRAME_OFFSET seconds”

- rawvideo -> no header inserted, only pixel representation

- -pix_fmt rgb565be -> the data flow from the board to the LCD is, in theory, 16-bit based. What we are asking here is “I want every pixel to be represented as a 16-bit RGB value (5 bits red, 6 green, 5 blue), big-endian”

- output frames will be saved with the .raw extension and with increasing numbers in the filename
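To make the rgb565be layout concrete, here is a minimal C sketch of how one pixel maps to the two bytes stored in each .raw file. It is not taken from the project: the function names are made up for illustration, and the packing simply truncates the low bits, whereas ffmpeg's own conversion may round or dither differently.

```c
#include <stdint.h>

/* Pack 8-bit R, G, B into one RGB565 value: RRRRRGGG GGGBBBBB.
 * Low bits are truncated (an assumption; ffmpeg may round or dither). */
static uint16_t rgb565_pack(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

/* Store the value big-endian, as rgb565be does: high byte first. */
static void rgb565_store_be(uint16_t px, uint8_t out[2])
{
    out[0] = (uint8_t)(px >> 8);
    out[1] = (uint8_t)(px & 0xFFu);
}

/* Read a big-endian pixel back from the byte stream. */
static uint16_t rgb565_load_be(const uint8_t in[2])
{
    return (uint16_t)((in[0] << 8) | in[1]);
}
```

For example, pure red (255, 0, 0) packs to 0xF800 and is stored as the bytes 0xF8, 0x00.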

Image conversion

Now that we have a bunch of .raw frames in our folder, the next step is to convert them into a C-like structure that will be imported into our project.

As shown in a previous Special Project (thanks to Gianni Cito), the free and open source image editor GIMP comes to our rescue, since it offers “Source Code” as an output format for an input image. Unfortunately, unless we master GIMP’s Script-Fu scripting language, this is a time-consuming operation and does not allow us to convert multiple images at once.

Instead, we already have a partial conversion: a stream of bytes that can be turned into a C array with the following commands

find . -name "*.raw" | sort | xargs -r -I{} cat "{}" > video_merged

xxd -i video_merged > array.h

The first command merges all the frames, in filename order, into one big file that represents our video (find alone does not guarantee any ordering, hence the sort). The second dumps that file as a C array and generates the .h file.

Ok.. and now?

After this long conversion phase, what we have is an unsigned char[] array in which each 16-bit pixel takes two consecutive bytes, and we want to make it a const unsigned char[] one.

Why const?

Because a const array is placed in the LandTiger LPC1768's 512 kB of read-only flash memory, a relatively large amount, instead of filling the much smaller RAM.
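As an illustration, the sketch below shows what the generated header looks like once const is added, plus a helper that locates a frame inside the merged array. The names video_merged and video_merged_len follow what xxd -i derives from the input filename; the 2×2 frame size, the sample bytes and the helper functions are assumptions made up for this example, not code from the repository.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical frame geometry; use the WIDTH and HEIGHT given to ffmpeg. */
#define FRAME_W 2u
#define FRAME_H 2u
#define FRAME_BYTES (FRAME_W * FRAME_H * 2u)  /* 2 bytes per RGB565 pixel */

/* Stand-in for the xxd output, edited to be const (two tiny 2x2 frames). */
const unsigned char video_merged[] = {
    0xF8, 0x00, 0xF8, 0x00, 0xF8, 0x00, 0xF8, 0x00,  /* frame 0: red  */
    0x00, 0x1F, 0x00, 0x1F, 0x00, 0x1F, 0x00, 0x1F   /* frame 1: blue */
};
const unsigned int video_merged_len = sizeof video_merged;

/* Number of whole frames stored in the array. */
static unsigned int frame_count(void)
{
    return video_merged_len / FRAME_BYTES;
}

/* Pointer to the first byte of frame n, or NULL if n is out of range. */
static const unsigned char *frame_at(unsigned int n)
{
    return (n < frame_count()) ? &video_merged[n * FRAME_BYTES] : NULL;
}
```

Since frames are simply concatenated, frame n starts at byte offset n × WIDTH × HEIGHT × 2.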

How does it work?

1. Import the converted .h array

2. Create a video object from it

3. Set the appropriate RIT interval value

4. Enable RIT
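The steps above could be organized roughly as in the following sketch. The video_t type and both functions are hypothetical and only illustrate the idea; the actual code in the repository may look quite different. The RIT setup itself is left out, since it depends on the board support library.

```c
#include <stdint.h>

/* Hypothetical video descriptor; the repository's actual type may differ. */
typedef struct {
    const unsigned char *data;  /* merged RGB565 frames, stored in flash */
    uint16_t width;             /* frame width in pixels */
    uint16_t height;            /* frame height in pixels */
    uint32_t frames;            /* number of whole frames in data */
    uint32_t current;           /* index of the next frame to draw */
} video_t;

/* Create a video object from the imported array of len bytes. */
static video_t video_create(const unsigned char *data, uint32_t len,
                            uint16_t width, uint16_t height)
{
    video_t v = { data, width, height, 0u, 0u };
    uint32_t frame_bytes = (uint32_t)width * height * 2u;

    if (frame_bytes != 0u)
        v.frames = len / frame_bytes;
    return v;
}

/* Meant to be called once per RIT interrupt: returns the frame to draw now
 * and advances to the next one, wrapping around so the clip loops.
 * Returns a null pointer if the video holds no frames. */
static const unsigned char *video_next_frame(video_t *v)
{
    const unsigned char *frame;

    if (v->frames == 0u)
        return 0;
    frame = v->data + v->current * ((uint32_t)v->width * v->height * 2u);
    v->current = (v->current + 1u) % v->frames;
    return frame;
}
```

The interrupt handler would then pass the returned pointer to whatever routine pushes a frame to the LCD.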

You can find the code, the implementation details and an explanation of the import operations in the GitLab repository.

More about timing

In step 3 (setting the RIT interval) I said that we must find a proper value for the RIT initialization. Strict timing must be respected in order to obtain acceptable video playback.

First of all, we need to wait a certain number of milliseconds between two consecutive frames, determined by the input video’s frame rate.

For example, a 25 fps video leads to a 40 ms delay between frames.

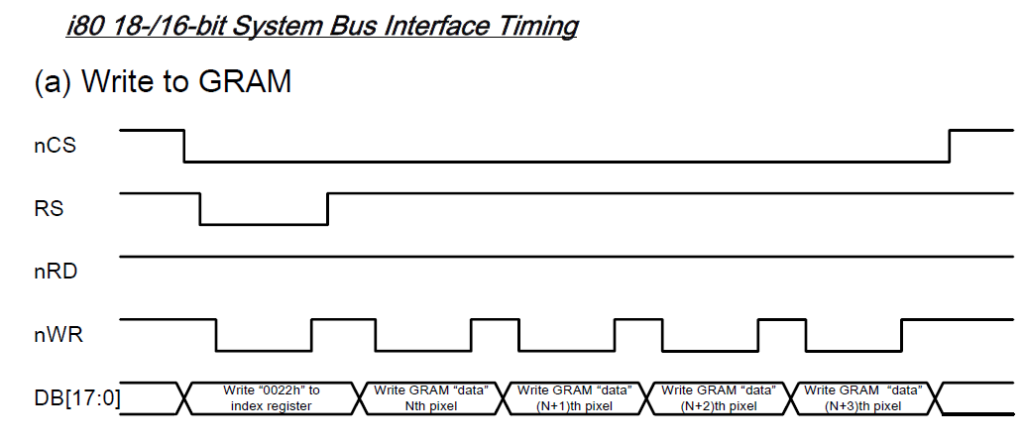
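The arithmetic behind the interval can be sketched as follows. The function names are hypothetical, and the clock that feeds the RIT depends on how the system clocks are configured, so it is passed as a parameter rather than hard-coded.

```c
#include <stdint.h>

/* Milliseconds between frames for a given frame rate, rounded to nearest. */
static uint32_t frame_period_ms(uint32_t fps)
{
    return (fps == 0u) ? 0u : (1000u + fps / 2u) / fps;
}

/* Timer ticks between frames, given the timer input clock in Hz.
 * The clock value is an assumption the caller must supply: it depends on
 * the board's clock configuration. */
static uint32_t frame_period_ticks(uint32_t timer_clk_hz, uint32_t fps)
{
    return (fps == 0u) ? 0u : timer_clk_hz / fps;
}
```

With a 25 MHz timer clock (an assumed value, check your clock setup), a 25 fps video needs 1,000,000 ticks per frame, which corresponds to the 40 ms mentioned above.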

Then, we need a fast way of transferring the frame’s byte stream to the LCD. The GLCD library comes with an LCD_SetPoint function, which accepts the X-Y coordinates and the 16-bit color value as parameters, but it is too slow for video playback. For every pixel it performs three writes to the LCD: two to select the coordinates (LCD registers R20h and R21h) and one for the output RGB value (R22h).

Please note that each of these writes actually hides a double write to the LCD (one to the IR index register and one with the actual value). Furthermore, although the ILI932x LCD supports a 16-bit interface, the way it is connected to this board only allows 8-bit transfers (only 8 of the 16 pins are connected).

Clearly, we need to dig into the LCD manual in order to find a faster way of transferring pixels, one with more constant delays.

Luckily, the ILI932x LCD offers a Window Address Area mode, which lets the user select a rectangular subset of the screen. By writing to registers R50h-R53h we set the horizontal and vertical start and end coordinates of the window. Then we can send all the frame’s pixels with a single index register write (R22h: data transfer) followed by a stream of pixel values. Refer to the following picture
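The window-based transfer described above can be sketched in C as follows. The register numbers are the ones named in the text; everything else is an assumption for illustration: the two low-level write functions are stand-ins that only count transfers so that the sketch compiles on a PC, the function names are hypothetical, and the entry mode and axis orientation are assumed to be configured already. Frame width and height must be at least 1.

```c
#include <stdint.h>

/* ILI932x register indexes mentioned in the text. */
#define REG_GRAM_X    0x20u  /* R20h: horizontal GRAM address */
#define REG_GRAM_Y    0x21u  /* R21h: vertical GRAM address */
#define REG_GRAM_DATA 0x22u  /* R22h: write data to GRAM */
#define REG_WIN_X0    0x50u  /* R50h: window horizontal start */
#define REG_WIN_X1    0x51u  /* R51h: window horizontal end */
#define REG_WIN_Y0    0x52u  /* R52h: window vertical start */
#define REG_WIN_Y1    0x53u  /* R53h: window vertical end */

/* Stand-ins for the pin-level routines: on the board they would drive the
 * LCD bus (two 8-bit transfers per 16-bit value); here they only count. */
static unsigned long lcd_writes;
static void lcd_write_index(uint16_t reg)  { (void)reg;   lcd_writes++; }
static void lcd_write_data(uint16_t value) { (void)value; lcd_writes++; }

/* One register write = index write followed by data write. */
static void lcd_write_reg(uint16_t reg, uint16_t value)
{
    lcd_write_index(reg);
    lcd_write_data(value);
}

/* Draw a w x h frame of big-endian RGB565 pixels with its top-left corner
 * at (x, y), using the window address area. Requires w >= 1 and h >= 1. */
static void lcd_draw_frame(uint16_t x, uint16_t y, uint16_t w, uint16_t h,
                           const unsigned char *frame)
{
    uint32_t i, pixels = (uint32_t)w * h;

    lcd_write_reg(REG_WIN_X0, x);
    lcd_write_reg(REG_WIN_X1, (uint16_t)(x + w - 1u));
    lcd_write_reg(REG_WIN_Y0, y);
    lcd_write_reg(REG_WIN_Y1, (uint16_t)(y + h - 1u));
    lcd_write_reg(REG_GRAM_X, x);    /* start address inside the window */
    lcd_write_reg(REG_GRAM_Y, y);

    lcd_write_index(REG_GRAM_DATA);  /* one index write for the whole frame */
    for (i = 0; i < pixels; i++)
        lcd_write_data((uint16_t)((frame[2u * i] << 8) | frame[2u * i + 1u]));
}
```

Under this model a 50×50 frame costs 13 setup writes plus 2500 pixel writes, so the per-pixel cost is essentially a single data write.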

With LCD_SetPoint we would have needed at least 5 writes between each consecutive pixel (not taking into account the 16-bit interface problem).

Showcase

In conclusion

A few other words to summarize our results and outline some open problems.

We’ve managed to display a short sequence of frames so as to give us the feeling of a video. The conversion sequence is automatic so that we can start it and import everything inside our project.

The commands are meant to be run on a Unix/Linux system, although there are tools that make them available on Windows too.

✅ Achieved goals

- Video playback

- Convert input video

- Possibility of zooming in on the image (with some loss of quality, though)

❌ Limitations

- Unfortunately, the 512 kB flash memory is only big enough for a small amount of data (basically a sequence of a few dozen 50×50 px frames)

- Long board flashing times

- Complex inclusion operations: we need to include the headers and re-compile every time
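To put the flash limitation into numbers, here is a small C sketch of the budget calculation. The function names are made up, and the reserved size (program code and other constants) is a parameter you would have to read from your own build output.

```c
#include <stdint.h>

/* Bytes needed by one RGB565 frame (2 bytes per pixel). */
static uint32_t frame_bytes(uint32_t width, uint32_t height)
{
    return width * height * 2u;
}

/* Upper bound on the frames that fit in flash_bytes once reserved_bytes
 * (program code and other constants) are set aside. */
static uint32_t max_frames(uint32_t flash_bytes, uint32_t reserved_bytes,
                           uint32_t width, uint32_t height)
{
    uint32_t fb = frame_bytes(width, height);

    if (fb == 0u || reserved_bytes >= flash_bytes)
        return 0u;
    return (flash_bytes - reserved_bytes) / fb;
}
```

A 50×50 frame takes 5000 bytes, so even with nothing else in flash at most 104 frames fit in 512 kB, about four seconds at 25 fps. A full-screen 240×320 frame takes 153,600 bytes, so only 3 would fit.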

📽️ We have overcome these limitations with our improved Audio Visual player, in which the SD card is used to load any pre-converted video, and a somewhat complex, tailored synchronization between audio and video allows correct playback (at a slightly higher resolution)!

Federico Bitondo, s276294

Prof. Paolo Bernardi