The objective of this project is to extend a previous media player project, based on a custom video format, so that it supports standard video files. Since the MP4 standard defines a container that can hold a wide range of video and audio formats, it is important to select the right ones, keeping in mind the low CPU performance (100 MHz on a single core) and the limited memory available (only 64 KB of RAM). The simplest video codec that can be encapsulated in an MP4 file is Motion JPEG, which is literally a sequence of JPEG images. This format, while not efficient in terms of size, is very fast to decode and only needs enough RAM to store a single frame at a time (or even less, depending on the JPEG decoding library). For audio, the best fit is FLAC, a lossless format that only requires fixed-point arithmetic. Both audio and video quality need to be kept low, since the screen cannot play video larger than about 128×64 pixels in real time and the sound board has a limited depth of 10 bits per sample.

MP4 file structure

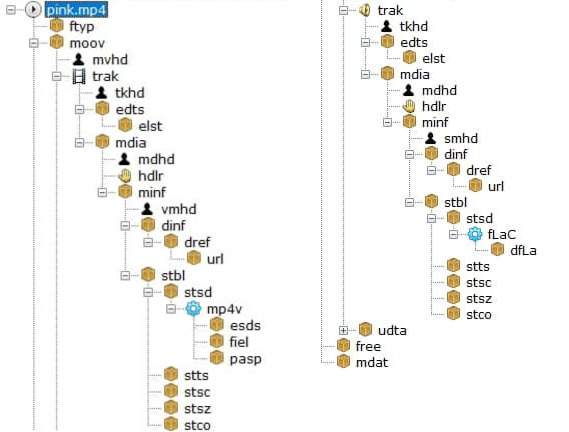

MP4 files are composed of a sequence of data containers, called atoms, organized in a tree structure.

The moov atom contains all the information a decoder needs to find and decode a track’s samples, such as their offset from the beginning of the file, their size, the number of samples per chunk of data, the video resolution, and the audio sample depth.
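
As an illustration of how a decoder walks the atom tree, the sketch below parses a single atom header. In the ISO base media file format every atom starts with a 32-bit big-endian size followed by a four-character type, and a size of 1 means that a 64-bit size follows the type. The names `atom_header_t`, `read_be32` and `parse_atom_header` are hypothetical and are not taken from the project's source code.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Header of one MP4 atom: 32-bit big-endian size, then a 4-character type. */
typedef struct {
    uint64_t size;        /* total atom size in bytes, header included (0 = up to end of file) */
    char     type[5];     /* four-character code, NUL-terminated for printing */
    uint8_t  header_len;  /* 8 normally, 16 when a 64-bit size follows the type */
} atom_header_t;

/* Read a 32-bit big-endian integer from 4 bytes. */
static uint32_t read_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Parse an atom header from buf. Returns 0 on success, -1 if buf is too short. */
int parse_atom_header(const uint8_t *buf, size_t len, atom_header_t *out)
{
    if (len < 8) {
        return -1;
    }
    uint32_t size32 = read_be32(buf);
    memcpy(out->type, buf + 4, 4);
    out->type[4] = '\0';
    out->header_len = 8;
    out->size = size32;
    if (size32 == 1) {
        /* Extended form: the real size is a 64-bit value after the type. */
        if (len < 16) {
            return -1;
        }
        out->size = ((uint64_t)read_be32(buf + 8) << 32) | read_be32(buf + 12);
        out->header_len = 16;
    }
    return 0;
}
```

To find a given atom such as moov, a reader can parse a header, compare the type, and skip `size` bytes to reach the next sibling, descending only into the containers it cares about.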

Structure of an MP4 file

This information is split between 4 different tables for each track. Due to the lack of RAM available on the board, no table can be fully loaded into memory: they can only be read on demand.

Since moving a file pointer backwards is an extremely slow operation on a FAT partition, 4 different file pointers are needed, 2 for each track, because 2 of the tables are needed in real time during decoding. The two tables are very far apart in the file, so sharing a single pointer would mean continuously seeking back and forth, with a massive drop in performance.
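
The idea of one forward-only pointer per table can be sketched as follows. This is a minimal illustration that uses the standard C `FILE` API as a stand-in for the FAT library running on the board, and it assumes a table made of 32-bit big-endian entries; `table_cursor_t`, `table_open`, `table_next` and `table_close` are hypothetical names.

```c
#include <stdint.h>
#include <stdio.h>

/* Forward-only cursor over a table of 32-bit big-endian entries.
   Each table owns its file handle, so reading one table never moves
   the position used by another. */
typedef struct {
    FILE    *fp;         /* dedicated handle, positioned at the next entry */
    uint32_t remaining;  /* entries not yet read */
} table_cursor_t;

/* Open a dedicated handle and seek once to the first entry of the table. */
int table_open(table_cursor_t *t, const char *path,
               long first_entry_offset, uint32_t entry_count)
{
    t->remaining = 0;
    t->fp = fopen(path, "rb");
    if (t->fp == NULL) {
        return -1;
    }
    if (fseek(t->fp, first_entry_offset, SEEK_SET) != 0) {
        fclose(t->fp);
        t->fp = NULL;
        return -1;
    }
    t->remaining = entry_count;
    return 0;
}

/* Read the next entry on demand. Returns 0 on success, -1 at end of table or on error. */
int table_next(table_cursor_t *t, uint32_t *value)
{
    uint8_t b[4];
    if (t->remaining == 0 || fread(b, 1, 4, t->fp) != 4) {
        return -1;
    }
    t->remaining--;
    *value = ((uint32_t)b[0] << 24) | ((uint32_t)b[1] << 16) |
             ((uint32_t)b[2] << 8)  |  (uint32_t)b[3];
    return 0;
}

/* Release the handle owned by the cursor. */
void table_close(table_cursor_t *t)
{
    if (t->fp != NULL) {
        fclose(t->fp);
        t->fp = NULL;
    }
}
```

With two cursors per track, reads from the two tables can be interleaved freely: each handle only ever advances, so no backward seek is issued after the initial positioning.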

Encoded data is stored inside the mdat section, with each track split into multiple interleaved chunks.

Video and audio conversion

To convert a video to the supported MP4 variant, ffmpeg, a powerful open-source tool, was used with the following command:

ffmpeg -i INPUT_VIDEO_FILE -vcodec mjpeg -acodec flac -filter:v fps=24,scale=128:64 -strict -2 -ac 1 -sample_fmt s16 -ar 16000 -movflags +faststart OUTPUT.MP4

Arguments

- -vcodec mjpeg: select Motion JPEG as the video encoding

- -acodec flac: select FLAC as the audio encoding

- -filter:v: specifies a list of filters to apply to the video stream

  - fps=24: resample the video stream to 24 frames per second (higher values may not be playable in real time, depending on the video resolution, since the screen interface is extremely slow; see http://cas.polito.it/NXP-LANDTIGER@PoliTo-University/?p=225 for further explanation)

  - scale=128:64: resize the video to 128×64 pixels (both dimensions must be multiples of 8 due to the limitations of the decoding library, and a total number of pixels greater than 128×64 can cause frame delays)

- -ac 1: merge all audio channels into 1, since the board only has one speaker

- -sample_fmt s16: reduce the audio sample depth to 16 bits (the 6 lower bits are discarded during audio playback, but ffmpeg cannot generate 10-bit samples)

- -ar 16000: resample the audio stream to 16000 samples per second (values higher than 16000 may require resizing the buffer)

- -movflags +faststart: write the moov atom at the start of the file in order to make reading the data tables faster
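
The reduction from 16-bit samples to the 10 bits supported by the sound board can be sketched as below. This is only an illustration: it assumes the output stage expects unsigned values, so the signed sample is first shifted into the 0..65535 range before the 6 lower bits are dropped. The function name `pcm16_to_dac10` is hypothetical.

```c
#include <stdint.h>

/* Convert one signed 16-bit PCM sample to an unsigned 10-bit value (0..1023).
   Assumption: the output stage takes unsigned samples. */
uint16_t pcm16_to_dac10(int16_t sample)
{
    /* Move the signed range -32768..32767 to the unsigned range 0..65535. */
    uint16_t unsigned16 = (uint16_t)((int32_t)sample + 32768);
    /* Discard the 6 least significant bits, keeping the 10 most significant. */
    return (uint16_t)(unsigned16 >> 6);
}
```

Silence (sample value 0) maps to 512, the middle of the 10-bit range, and the two extremes map to 0 and 1023.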

Asynchronous reading and reproduction

In a classic media player on a computer, synchronization is generally achieved with a multi-threaded or multi-process architecture. The LPC1768 is a single-core microcontroller, so neither multithreading nor multiprocessing is available. They are replaced by an asynchronous paradigm based on 4 different timers and a priority system between their handlers.

All 4 available timers are used in the project, with the following priority levels from high to low:

- Play the next audio sample, either 44100, 16000 or 8000 times per second depending on the sample rate

- Decode and display a video sample, 24 times per second (or fewer, depending on the video encoding)

- Read more data (different timers, same priority)

  - Fill one of the 2 audio buffers if empty (1/50 the frequency of timer 1, about the time needed to play 50 audio samples)

  - Fill the video buffer if empty (2x the frequency of timer 2, to make sure there is always data to process)

The priority is based on how much a delayed execution would affect the user experience. An audio sample played for too long would produce a distorted, incomprehensible sound, while a slightly delayed video update would not pose a problem.
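
The interaction between the high-priority audio handler and the low-priority refill handler can be sketched with a pair of alternating buffers. This is a simplified model, not the project's code: the 50-sample buffer size is an assumption derived from the timer ratio described above, the handlers are plain functions rather than interrupt routines, and `audio_ring_t`, `audio_next_sample` and `audio_refill` are hypothetical names.

```c
#include <stdint.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define AUDIO_BUF_SAMPLES 50  /* assumed size: one refill period worth of samples */

typedef struct {
    int16_t       data[2][AUDIO_BUF_SAMPLES];
    volatile bool ready[2];   /* true while a buffer holds samples not yet played */
    uint8_t       play_half;  /* buffer currently being played */
    size_t        play_pos;   /* next sample index inside that buffer */
} audio_ring_t;

/* High-priority path: fetch the next sample to play.
   Returns false on underrun (the current buffer has not been filled yet). */
bool audio_next_sample(audio_ring_t *r, int16_t *out)
{
    if (!r->ready[r->play_half]) {
        return false;
    }
    *out = r->data[r->play_half][r->play_pos++];
    if (r->play_pos == AUDIO_BUF_SAMPLES) {
        /* Buffer consumed: mark it empty and switch to the other one. */
        r->ready[r->play_half] = false;
        r->play_half ^= 1u;
        r->play_pos = 0;
    }
    return true;
}

/* Low-priority path: copy AUDIO_BUF_SAMPLES decoded samples from src into
   one empty buffer, trying the one that will be played first.
   Returns the index of the buffer filled, or -1 if both are still full. */
int audio_refill(audio_ring_t *r, const int16_t *src)
{
    for (uint8_t i = 0; i < 2; i++) {
        uint8_t half = (uint8_t)((r->play_half + i) & 1u);
        if (!r->ready[half]) {
            memcpy(r->data[half], src, sizeof r->data[half]);
            r->ready[half] = true;
            return half;
        }
    }
    return -1;
}
```

Because the playback path never waits on the refill path, a late refill only produces an underrun on the next buffer, while the sample currently being played is never held up by file reads.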

Conclusion

This kind of MP4 file achieves a compression ratio of about 8:1 compared to the raw file used in the previous version of this project, while keeping the same audio quality and a slightly lower video quality (JPEG compression can generate some video artifacts). While the software can theoretically handle a higher resolution (up to 320×240, the full screen resolution), the screen interface is extremely slow, resulting in noticeable delays for video sizes larger than 128×64.

Example: https://youtu.be/EjUpucxNXsM